Common Transformer Interview Questions and Hand-Written Code

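Transformer explained: Transformer模型详解(图解最完整版) (Transformer Model Explained, the Most Complete Illustrated Version) - Zhihu (zhihu.com)

Hand-written attention video: 手写self-attention的四重境界-part1 pure self-attention (Four Levels of Hand-Writing Self-Attention, Part 1: Pure Self-Attention) - bilibili

Hand-written Transformer Decoder video: 一个视频讲清楚 Transfomer Decoder的结构和代码,面试高频题 (The Structure and Code of the Transformer Decoder Explained in One Video, a Frequent Interview Question) - bilibili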

Common interview questions

Why does Transformer attention divide by $\sqrt{d_k}$?

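To keep the dot products from growing too large: large scores push softmax into its saturated region, where its gradients vanish.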
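A quick numerical check of this claim, assuming (for illustration only) that the components of q and k are independent standard normal variables: the variance of q·k grows roughly like d_k, dividing by √d_k brings it back to about 1, and scaling logits up makes the softmax Jacobian collapse toward zero. The helper names `dot_product_variance` and `softmax_jacobian_max` are hypothetical.

```python
import torch

torch.manual_seed(0)

def dot_product_variance(d_k: int, n: int = 10000, scale: bool = False) -> float:
    """Empirical variance of q·k over n random pairs q, k ~ N(0, I_{d_k})."""
    q = torch.randn(n, d_k)
    k = torch.randn(n, d_k)
    scores = (q * k).sum(dim=-1)           # n independent dot products
    if scale:
        scores = scores / d_k ** 0.5       # scaled dot product
    return scores.var().item()

def softmax_jacobian_max(logits: torch.Tensor) -> float:
    """Largest |entry| of the softmax Jacobian diag(p) - p p^T."""
    p = torch.softmax(logits, dim=-1)
    jac = torch.diag(p) - torch.outer(p, p)
    return jac.abs().max().item()

for d_k in (16, 64, 512):
    print(d_k, dot_product_variance(d_k), dot_product_variance(d_k, scale=True))

# Same logits, 10x larger: softmax becomes nearly one-hot and its gradient almost vanishes
print(softmax_jacobian_max(torch.tensor([1.0, 2.0, 3.0])))     # about 0.22
print(softmax_jacobian_max(torch.tensor([10.0, 20.0, 30.0])))  # about 4.5e-05
```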

Why $\sqrt{d_k}$ and not some other factor?

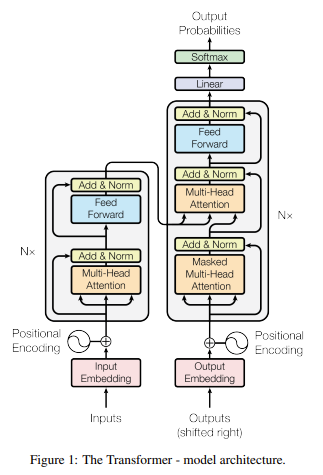
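Under the standard simplifying assumption that the components $q_i$ and $k_i$ of a query and a key are independent with mean 0 and variance 1, the usual argument is:

$$
q\cdot k=\sum_{i=1}^{d_k} q_i k_i,\qquad
\mathbb{E}[q_i k_i]=\mathbb{E}[q_i]\,\mathbb{E}[k_i]=0,\qquad
\operatorname{Var}(q_i k_i)=\mathbb{E}[q_i^2]\,\mathbb{E}[k_i^2]-\big(\mathbb{E}[q_i k_i]\big)^2=1
$$

$$
\operatorname{Var}(q\cdot k)=\sum_{i=1}^{d_k}\operatorname{Var}(q_i k_i)=d_k
\quad\Longrightarrow\quad
\operatorname{Var}\!\left(\frac{q\cdot k}{\sqrt{d_k}}\right)=\frac{d_k}{d_k}=1
$$

So $\sqrt{d_k}$ is the factor that keeps the standard deviation of the scores at 1 for any $d_k$. Dividing by $d_k$ instead would shrink the variance to $1/d_k$ and push the softmax toward a uniform distribution, while dividing by a fixed constant would not compensate for growth in $d_k$.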

What is the structure of the Transformer?
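As a rough orientation: the original Transformer is an encoder-decoder model. The encoder stacks N identical layers, each with multi-head self-attention followed by a position-wise feed-forward network (FFN); the decoder stacks N layers, each with masked self-attention, encoder-decoder cross-attention, and an FFN. Every sublayer is wrapped in a residual connection followed by LayerNorm. Below is a minimal sketch of one encoder layer in that original Post-LN arrangement, using PyTorch's `nn.MultiheadAttention`; the class name `EncoderLayer` is illustrative, and the sizes (`d_model=512`, `num_heads=8`, `d_ff=2048`) follow the base configuration of the original paper.

```python
import torch
import torch.nn as nn

class EncoderLayer(nn.Module):
    """One Post-LN encoder layer: x -> LN(x + MHA(x)) -> LN(x + FFN(x))."""

    def __init__(self, d_model: int = 512, num_heads: int = 8,
                 d_ff: int = 2048, dropout: float = 0.1):
        super().__init__()
        self.attn = nn.MultiheadAttention(d_model, num_heads,
                                          dropout=dropout, batch_first=True)
        self.ffn = nn.Sequential(
            nn.Linear(d_model, d_ff), nn.ReLU(), nn.Linear(d_ff, d_model)
        )
        self.norm1 = nn.LayerNorm(d_model)
        self.norm2 = nn.LayerNorm(d_model)
        self.dropout = nn.Dropout(dropout)

    def forward(self, x, key_padding_mask=None):
        # x: [batch, seq, d_model]; key_padding_mask: [batch, seq], True = padding (ignored)
        attn_out, _ = self.attn(x, x, x, key_padding_mask=key_padding_mask)
        x = self.norm1(x + self.dropout(attn_out))        # residual + LN after attention
        x = self.norm2(x + self.dropout(self.ffn(x)))     # residual + LN after FFN
        return x

layer = EncoderLayer()
out = layer(torch.randn(2, 10, 512))
print(out.shape)  # torch.Size([2, 10, 512])
```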

Why does the Transformer use LN instead of BN?

The Transformer uses Layer Normalization (LN) rather than Batch Normalization (BN) for the following main reasons:

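- Variable sequence length
  - The Transformer processes variable-length sequences (e.g., sentences of different lengths in NLP).
  - BN normalizes each feature over the batch dimension, but the same feature at different sequence positions carries different statistical meaning, and padding tokens distort the statistics.
  - LN normalizes over the feature dimension of each token individually, so it is unaffected by sequence length.
- Unstable batch size
  - BN performs poorly with small batches because the batch statistics are noisy estimates.
  - Training/inference mismatch: BN uses batch statistics during training and running (global) statistics at inference.
  - LN does not depend on the batch, so it behaves identically in training and inference.
- Sequence-modeling properties
  - In NLP tasks, the same feature should follow the same distribution regardless of position.
  - For example, the embedding of "apple" should keep a similar representation whether it appears at the start or the end of a sentence.
  - LN normalizes all features of each position independently, preserving comparability across positions.
  - BN pools its statistics over all positions and samples in the batch, so a token's normalized value depends on unrelated tokens, breaking per-position independence.
- Training stability
  - BN is sensitive to outlier samples in a batch (e.g., one unusually long sequence can skew the statistics of the whole batch).
  - LN treats each sample independently and is not affected by other samples in the batch.
  - Transformer training typically uses a large learning rate with a warmup schedule, and LN is more stable in this setting.
- Computational efficiency
  - LN only needs the mean and variance of each token's feature vector, which is simple and cheap.
  - BN must maintain running_mean/running_var, which adds bookkeeping.
  - LN statistics are computed independently per token, with no cross-sample (or, in distributed training, cross-device) synchronization, which fits the parallel computation of self-attention.
- Theoretical fit
  - LN formula: $\mathrm{LN}(x)=\gamma\cdot\frac{x-\mu}{\sigma}+\beta$, where $\mu$ and $\sigma$ are the mean and standard deviation over the feature dimension (in practice $\sigma=\sqrt{\operatorname{Var}(x)+\epsilon}$ for a small $\epsilon$), and $\gamma$, $\beta$ are a learnable scale and shift.
  - In the Transformer's residual connections, LN is placed either before the attention/FFN sublayer (Pre-LN) or after the residual addition (Post-LN).
  - This helps stabilize gradient flow through deep stacks, mitigating vanishing/exploding gradients.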
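To make the batch-dependence point concrete, here is a small sketch that normalizes a `[batch, seq, d_model]` tensor both ways by hand: LN takes statistics over the last (feature) dimension of each token, while the BN variant takes statistics per feature pooled over the batch and sequence dimensions, as `nn.BatchNorm1d` does in training mode. The learnable γ/β are omitted (γ = 1, β = 0), and the function names are hypothetical. Perturbing one sample changes BN's output for every other sample but leaves LN's output untouched.

```python
import torch
import torch.nn.functional as F

def layer_norm(x, eps=1e-5):
    # statistics per token, over the feature dimension d_model
    mu = x.mean(dim=-1, keepdim=True)
    var = x.var(dim=-1, unbiased=False, keepdim=True)
    return (x - mu) / torch.sqrt(var + eps)

def batch_norm_train(x, eps=1e-5):
    # statistics per feature, pooled over batch and sequence positions
    mu = x.mean(dim=(0, 1), keepdim=True)
    var = x.var(dim=(0, 1), unbiased=False, keepdim=True)
    return (x - mu) / torch.sqrt(var + eps)

torch.manual_seed(0)
x = torch.randn(4, 6, 8)            # [batch, seq, d_model]
x_mod = x.clone()
x_mod[1] = x_mod[1] * 10 + 5        # perturb only sample 1

# Does sample 0's output change when sample 1 changes?
ln_same = torch.allclose(layer_norm(x)[0], layer_norm(x_mod)[0])
bn_same = torch.allclose(batch_norm_train(x)[0], batch_norm_train(x_mod)[0])
print(ln_same, bn_same)  # True False

# The hand-written LN agrees with PyTorch's functional LayerNorm (no affine params)
print(torch.allclose(layer_norm(x), F.layer_norm(x, (8,)), atol=1e-5))  # True
```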

Key comparison

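| Property | LN (used by the Transformer) | BN (common in CNNs) |
|---|---|---|
| Normalization axis | Feature dimension (C), per token | Batch dimension (N), per feature |
| Batch dependence | None | Strong |
| Variable-length sequences | Well supported | Hard to handle |
| Training vs. inference | Identical | Different (batch vs. running statistics) |
| Overhead | Lower (no running statistics) | Higher (tracks running_mean/running_var) |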

Summary

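The fundamental reason the Transformer uses LN is its sequence-modeling nature and its need for training stability. LN's per-sample independence, position invariance, and batch independence suit self-attention and variable-length sequence processing, which BN cannot provide.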

Transformer code from scratch

Hand-written Attention

```python
import torch
```
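A minimal single-head self-attention sketch in the spirit of the "pure self-attention" level named in the linked video. It is not the post's original code: the class name `SelfAttention`, the use of a single head, and the mask convention (boolean, `False` = "may not attend") are assumptions.

```python
import math
import torch
import torch.nn as nn

class SelfAttention(nn.Module):
    """Single-head scaled dot-product self-attention (illustrative sketch)."""

    def __init__(self, d_model: int):
        super().__init__()
        self.d_model = d_model
        self.q_proj = nn.Linear(d_model, d_model)
        self.k_proj = nn.Linear(d_model, d_model)
        self.v_proj = nn.Linear(d_model, d_model)

    def forward(self, x, mask=None):
        # x: [batch, seq, d_model]
        q, k, v = self.q_proj(x), self.k_proj(x), self.v_proj(x)
        # scores: [batch, seq, seq], scaled by sqrt(d_model) to avoid softmax saturation
        scores = q @ k.transpose(-2, -1) / math.sqrt(self.d_model)
        if mask is not None:
            # mask: bool, broadcastable to [batch, seq, seq]; False = blocked
            scores = scores.masked_fill(~mask, float("-inf"))
        weights = torch.softmax(scores, dim=-1)   # each row sums to 1
        return weights @ v, weights

attn = SelfAttention(d_model=16)
out, w = attn(torch.randn(2, 5, 16))
print(out.shape, w.shape)  # torch.Size([2, 5, 16]) torch.Size([2, 5, 5])
```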

Hand-written Decoder

```python
import torch
```
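A sketch of one decoder block, assuming a decoder-only (GPT-style) layout: causal multi-head self-attention plus an FFN with Pre-LN residuals. The encoder-decoder cross-attention of the original Transformer decoder is deliberately omitted, and the name `DecoderBlock` and all sizes are illustrative, not taken from the linked video. The usage lines check causality: changing the last token must not affect earlier outputs.

```python
import math
import torch
import torch.nn as nn

class DecoderBlock(nn.Module):
    """Decoder-only block: causal self-attention + FFN, Pre-LN (illustrative)."""

    def __init__(self, d_model: int = 64, num_heads: int = 4, d_ff: int = 256,
                 dropout: float = 0.1):
        super().__init__()
        assert d_model % num_heads == 0
        self.h, self.d_k = num_heads, d_model // num_heads
        self.qkv = nn.Linear(d_model, 3 * d_model)   # fused Q/K/V projection
        self.out = nn.Linear(d_model, d_model)
        self.ffn = nn.Sequential(nn.Linear(d_model, d_ff), nn.GELU(),
                                 nn.Linear(d_ff, d_model))
        self.ln1, self.ln2 = nn.LayerNorm(d_model), nn.LayerNorm(d_model)
        self.drop = nn.Dropout(dropout)

    def causal_attention(self, x):
        b, t, d = x.shape
        q, k, v = self.qkv(x).chunk(3, dim=-1)
        # [b, t, d] -> [b, h, t, d_k]
        q, k, v = (z.view(b, t, self.h, self.d_k).transpose(1, 2) for z in (q, k, v))
        scores = q @ k.transpose(-2, -1) / math.sqrt(self.d_k)   # [b, h, t, t]
        # lower-triangular mask: position i may only attend to positions <= i
        causal = torch.tril(torch.ones(t, t, dtype=torch.bool, device=x.device))
        scores = scores.masked_fill(~causal, float("-inf"))
        weights = self.drop(torch.softmax(scores, dim=-1))
        ctx = (weights @ v).transpose(1, 2).reshape(b, t, d)    # merge heads
        return self.out(ctx)

    def forward(self, x):
        x = x + self.drop(self.causal_attention(self.ln1(x)))   # residual 1
        x = x + self.drop(self.ffn(self.ln2(x)))                # residual 2
        return x

torch.manual_seed(0)
block = DecoderBlock().eval()          # eval() disables dropout
x = torch.randn(1, 6, 64)
y1 = block(x)
x2 = x.clone()
x2[:, -1] = torch.randn(64)            # change only the last token
y2 = block(x2)
print(torch.allclose(y1[:, :-1], y2[:, :-1]))  # True: earlier positions unaffected
```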

Hand-written positional encoding

```python
import torch
```
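A sketch assuming the fixed sinusoidal encoding of the original Transformer paper, $PE_{(pos,2i)}=\sin(pos/10000^{2i/d_{model}})$ and $PE_{(pos,2i+1)}=\cos(pos/10000^{2i/d_{model}})$; learned or rotary encodings are common alternatives. The class name and `max_len` default are illustrative.

```python
import math
import torch
import torch.nn as nn

class SinusoidalPositionalEncoding(nn.Module):
    """PE[pos, 2i] = sin(pos / 10000^(2i/d_model)), PE[pos, 2i+1] = cos(same)."""

    def __init__(self, d_model: int, max_len: int = 5000):
        super().__init__()
        assert d_model % 2 == 0, "this sketch assumes an even d_model"
        pos = torch.arange(max_len, dtype=torch.float).unsqueeze(1)   # [max_len, 1]
        # 1 / 10000^(2i/d_model), computed via exp/log
        div = torch.exp(torch.arange(0, d_model, 2, dtype=torch.float)
                        * (-math.log(10000.0) / d_model))           # [d_model/2]
        pe = torch.zeros(max_len, d_model)
        pe[:, 0::2] = torch.sin(pos * div)   # even dimensions
        pe[:, 1::2] = torch.cos(pos * div)   # odd dimensions
        # buffer: saved with the model and moved by .to(device), but not trained
        self.register_buffer("pe", pe.unsqueeze(0))                 # [1, max_len, d_model]

    def forward(self, x):
        # x: [batch, seq, d_model]; add the encoding of the first seq positions
        return x + self.pe[:, : x.size(1)]

pe = SinusoidalPositionalEncoding(d_model=16, max_len=100)
out = pe(torch.zeros(2, 10, 16))
print(out.shape)  # torch.Size([2, 10, 16])
```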

Hand-written MHA (multi-head attention)

```python
import torch
```

mask: used to handle padding or to implement causal attention.
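A small sketch of how the two kinds of mask can be built and combined, assuming the convention `True` = "may attend" and scores shaped `[batch, heads, seq, seq]`. Conventions differ between codebases: in PyTorch's `nn.MultiheadAttention`, for instance, `True` in `key_padding_mask` means "ignore". The helper names are hypothetical.

```python
import torch

def padding_mask(lengths, max_len):
    """[batch, 1, 1, max_len] bool mask, True where the key is a real token."""
    positions = torch.arange(max_len)                          # [max_len]
    valid = positions.unsqueeze(0) < lengths.unsqueeze(1)      # [batch, max_len]
    return valid[:, None, None, :]                             # broadcast over heads and queries

def causal_mask(seq_len):
    """[1, 1, seq, seq] bool mask, True where query i may attend to key j (j <= i)."""
    return torch.tril(torch.ones(seq_len, seq_len, dtype=torch.bool))[None, None]

lengths = torch.tensor([3, 5])                     # two sequences padded to length 5
mask = padding_mask(lengths, 5) & causal_mask(5)   # [2, 1, 5, 5]
scores = torch.randn(2, 4, 5, 5)                   # [batch, heads, seq, seq]
weights = torch.softmax(scores.masked_fill(~mask, float("-inf")), dim=-1)
print(weights[0, 0])  # columns 3 and 4 (padding) and the upper triangle get weight 0
```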

Full pipeline:

1. Input x: [batch, seq, d_model]
2. Projections: Q = x·W_q, K = x·W_k, V = x·W_v
3. Split heads: [batch, seq, d_model] → [batch, seq, heads, d_k] → [batch, heads, seq, d_k], where d_k = d_model / heads
4. Attention scores: Q·K^T/√d_k → (optional mask) → softmax → weights×V
5. Merge heads: [batch, heads, seq, d_k] → [batch, seq, d_model]
6. Output projection: context·W_o
7. Output: [batch, seq, d_model]
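The pipeline above can be sketched as follows. This is a minimal version, not the post's original code; the class name `MultiHeadAttention`, the boolean mask convention (`False` = blocked), and the optional dropout on attention weights are assumptions.

```python
import math
import torch
import torch.nn as nn

class MultiHeadAttention(nn.Module):
    def __init__(self, d_model: int, num_heads: int, dropout: float = 0.0):
        super().__init__()
        assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
        self.h, self.d_k = num_heads, d_model // num_heads
        self.W_q = nn.Linear(d_model, d_model)
        self.W_k = nn.Linear(d_model, d_model)
        self.W_v = nn.Linear(d_model, d_model)
        self.W_o = nn.Linear(d_model, d_model)
        self.dropout = nn.Dropout(dropout)

    def split_heads(self, t):
        # [batch, seq, d_model] -> [batch, seq, heads, d_k] -> [batch, heads, seq, d_k]
        b, s, _ = t.shape
        return t.view(b, s, self.h, self.d_k).transpose(1, 2)

    def forward(self, x, mask=None):
        b, s, d_model = x.shape
        q = self.split_heads(self.W_q(x))
        k = self.split_heads(self.W_k(x))
        v = self.split_heads(self.W_v(x))
        scores = q @ k.transpose(-2, -1) / math.sqrt(self.d_k)   # [batch, heads, seq, seq]
        if mask is not None:   # bool, broadcastable to scores; False = blocked
            scores = scores.masked_fill(~mask, float("-inf"))
        weights = self.dropout(torch.softmax(scores, dim=-1))
        context = weights @ v                                     # [batch, heads, seq, d_k]
        # merge heads: [batch, heads, seq, d_k] -> [batch, seq, d_model]
        context = context.transpose(1, 2).contiguous().view(b, s, d_model)
        return self.W_o(context)                                  # output projection

mha = MultiHeadAttention(d_model=32, num_heads=4)
print(mha(torch.randn(2, 7, 32)).shape)  # torch.Size([2, 7, 32])
```

Passing `torch.tril(torch.ones(seq, seq, dtype=torch.bool))` as `mask` turns this into causal (decoder-style) attention.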

Hand-written GQA (grouped-query attention)

```python
import torch
```
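A minimal GQA sketch: `num_heads` query heads share a smaller number `num_kv_heads` of key/value heads, which shrinks the K/V projections (and, in inference, the KV cache). With `num_kv_heads == num_heads` it reduces to standard MHA, and with `num_kv_heads == 1` to multi-query attention (MQA). The class name is illustrative, the KV cache itself is not implemented, and the grouping order (consecutive query heads share one K/V head, via `repeat_interleave`) is one common convention rather than the only one.

```python
import math
import torch
import torch.nn as nn

class GroupedQueryAttention(nn.Module):
    """num_heads query heads share num_kv_heads K/V heads (illustrative sketch)."""

    def __init__(self, d_model: int, num_heads: int, num_kv_heads: int):
        super().__init__()
        assert d_model % num_heads == 0 and num_heads % num_kv_heads == 0
        self.h, self.h_kv = num_heads, num_kv_heads
        self.d_k = d_model // num_heads
        self.W_q = nn.Linear(d_model, num_heads * self.d_k)
        # K/V projections are smaller: only num_kv_heads heads
        self.W_k = nn.Linear(d_model, num_kv_heads * self.d_k)
        self.W_v = nn.Linear(d_model, num_kv_heads * self.d_k)
        self.W_o = nn.Linear(num_heads * self.d_k, d_model)

    def forward(self, x, mask=None):
        b, s, _ = x.shape
        q = self.W_q(x).view(b, s, self.h, self.d_k).transpose(1, 2)      # [b, h, s, d_k]
        k = self.W_k(x).view(b, s, self.h_kv, self.d_k).transpose(1, 2)   # [b, h_kv, s, d_k]
        v = self.W_v(x).view(b, s, self.h_kv, self.d_k).transpose(1, 2)
        # each K/V head serves a group of h // h_kv consecutive query heads
        group = self.h // self.h_kv
        k = k.repeat_interleave(group, dim=1)                              # [b, h, s, d_k]
        v = v.repeat_interleave(group, dim=1)
        scores = q @ k.transpose(-2, -1) / math.sqrt(self.d_k)
        if mask is not None:   # bool, broadcastable to [b, h, s, s]; False = blocked
            scores = scores.masked_fill(~mask, float("-inf"))
        weights = torch.softmax(scores, dim=-1)
        out = (weights @ v).transpose(1, 2).reshape(b, s, self.h * self.d_k)
        return self.W_o(out)

gqa = GroupedQueryAttention(d_model=64, num_heads=8, num_kv_heads=2)
print(gqa(torch.randn(2, 10, 64)).shape)  # torch.Size([2, 10, 64])
print(gqa.W_k.weight.shape)               # torch.Size([16, 64]): 2 K/V heads x d_k = 8
```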

Unless otherwise stated, all articles on this blog are licensed under CC BY-NC-SA 4.0. Please credit SpongeBob's Blog when reposting!